Breaking All the Way Out – Idea & Composition

by Maximilian Malek and Ralf Jung

You are responsible for what you create. It may come back to you at any point.

Our video plays with the idea of what would happen if virtual creations escape into the real world. As programmers, how far does our responsibility go for the things we release into the wild?

Conceptual Idea

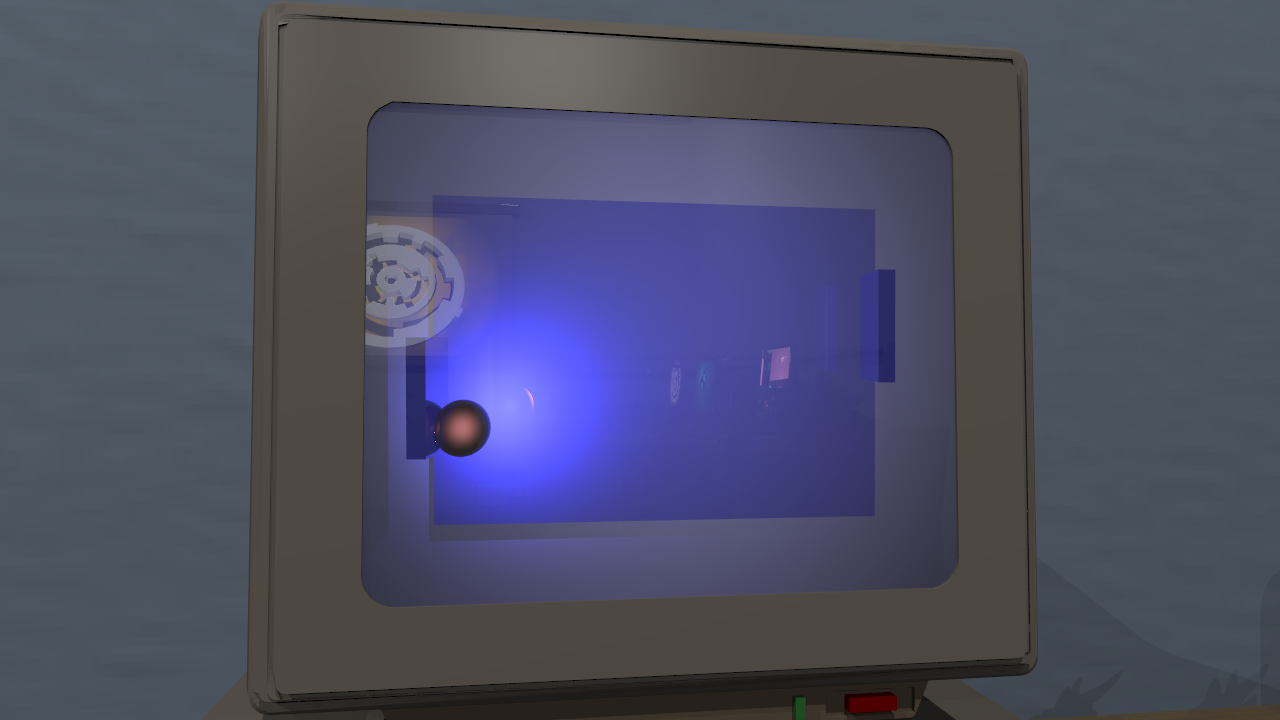

The initial idea was to show something breaking out of a PC screen and wreaking havoc in its environment. We chose Pong, which is widely considered to be the first successful video game. While Pong may be obsolete by today's standards, it has its own charm and is a well-known and widely recognized cult symbol. Even more importantly, it is considered completely harmless. Having a Pong game go wild, with the ball escaping its little world, was then chosen as the central, unexpected event.

As we were sort of forced into this fate throughout the course, we chose the secondary theme to be "computer graphics".

The "viewer" of the scene is a programmer, working deep into the night trying to get his work done in time (which does not work out, as Windows of course crashes again).

As a consequence, we went for a hand-held camera style: a first-person perspective, one continuous path, sometimes clumsy and shaky.

This is emphasized by having the viewer sit in front of a screen for the first ten seconds of the video.

We also added some references to recursion, which plays an important role in raytracing in particular and in computer science in general.

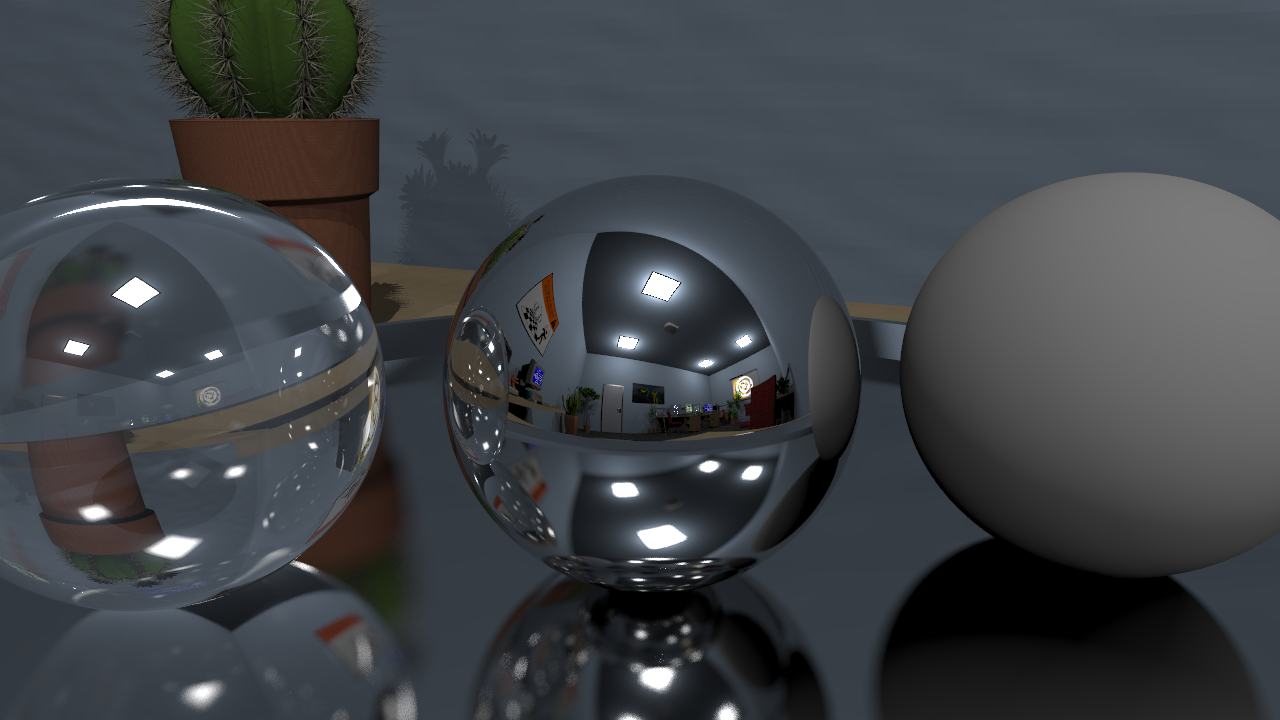

The "computer graphics" topic gave us the opportunity to show all the optional features we implemented during the semester. Instead of cluttering the scene with objects that would feel wrong in the given context, we decided to set the scene in a "computer graphics design lab". This let us show various recursively raytraced mini-scenes on screens spread throughout the room. It turned out to be the perfect complement to the Pong scene, which is also raytraced onto a PC screen, so it fit well into the concept. These scenes can also be seen mirrored in the screens showing other scenes, so we have recursion on several levels here.

Just showing screens with stuff would be boring, and a Pong ball popping out is not actually that interesting. Thus we had to add some action.

The screen's glass shattering into pieces and the ball flying around add the motion, while the burning screen and volumetric fog add emphasis and a fiery "aura".

Originally, we wanted to destroy objects in the office, but this turned out to be too hard to pull off in the given time.

Instead, we let the ball escape through the ceiling, which creates an eerie situation: just when the danger seems to be over, it turns out that there is no world outside and physics no longer applies. There is just a white light that pulls the viewer up, and a projector that mysteriously keeps functioning.

The title refers to how the Pong ball breaks out of the realm it is supposed to reside in, twice: First into the real world, and then further to... something that's beyond it. At the same time, it is a reference to Breakout, another old classic computer game which is about breaking the ceiling using a ball. It's also something like a single-player version of Pong.

Additionally, the scene contains references to things that inspired us:

- The mandatory teapot

- The Stanford standard models (bunny, dragon, armadillo)

- The Commodore 64, the most successful and iconic home computer of all time; even today, people still break world records with it

- The Metroid game series by Nintendo

- M.C. Escher, famous for impossible scenes, recursive drawings and accurate depiction of reflection and refraction

- The logo of the Revision Demoparty, which is an annual Demoscene event in Saarbrücken and attracts sceners from all over the world

The following demoscene productions provided further inspiration, and they influenced some design choices of our video:

- Heaven Seven by Exceed

- Five faces by Fairlight & Cloudkicker

- Debris by Farbrausch

- Rupture by ASD

Scene Composition

The composition of the scene was performed with an editor mode we wrote inside our raytracer. The motivation for this mode was simple: Neither of us was familiar with Blender when we started working on this project, and whatever we did in Blender would never match the look of the same scene in our raytracer. Thanks to adaptive subsampling, our raytracer was fast enough to be used in real time, at least at low resolutions. The interactive camera control, object editor and so on were all implemented in Lua, based on interfaces to the C++ side of the raytracer. This allowed us to prototype scenes much faster, and to keep the performance-critical part of the raytracer clean and independent from the higher-level tools we built on top of it.
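The text does not say which adaptive subsampling scheme the raytracer uses, so the following is only a minimal sketch of one common variant, written in Python for brevity rather than in the project's C++/Lua. It traces the corners of coarse blocks, fills a block by bilinear interpolation when its corners agree, and subdivides it otherwise. The names `render_adaptive` and `trace`, the block size of 8 and the threshold of 0.05 are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

Color = Tuple[float, float, float]


def lerp(a: Color, b: Color, t: float) -> Color:
    """Linear interpolation between two colors."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def color_distance(a: Color, b: Color) -> float:
    """Largest per-channel difference between two colors."""
    return max(abs(x - y) for x, y in zip(a, b))


def render_adaptive(trace: Callable[[int, int], Color], width: int, height: int,
                    block: int = 8, threshold: float = 0.05):
    """Render an image, tracing fewer rays where the image is smooth.

    `trace(x, y)` stands in for the expensive per-pixel raytracing call.
    Returns the image (rows of colors) and the number of rays traced.
    """
    image: List[List[Color]] = [[(0.0, 0.0, 0.0)] * width for _ in range(height)]
    traced: Dict[Tuple[int, int], Color] = {}  # each pixel is traced at most once

    def sample(x: int, y: int) -> Color:
        if (x, y) not in traced:
            traced[(x, y)] = trace(x, y)
        return traced[(x, y)]

    def fill(x0: int, y0: int, x1: int, y1: int) -> None:
        # Corners are inclusive pixel coordinates.
        c00, c10 = sample(x0, y0), sample(x1, y0)
        c01, c11 = sample(x0, y1), sample(x1, y1)
        w, h = x1 - x0, y1 - y0
        smooth = all(color_distance(c00, c) <= threshold for c in (c10, c01, c11))
        if smooth or (w <= 1 and h <= 1):
            # Bilinear interpolation; exact when every pixel is a corner.
            for y in range(y0, y1 + 1):
                v = (y - y0) / h if h else 0.0
                for x in range(x0, x1 + 1):
                    u = (x - x0) / w if w else 0.0
                    image[y][x] = lerp(lerp(c00, c10, u), lerp(c01, c11, u), v)
            return
        # Corners disagree: split along every dimension longer than one pixel.
        xs = [x0, x0 + w // 2, x1] if w > 1 else [x0, x1]
        ys = [y0, y0 + h // 2, y1] if h > 1 else [y0, y1]
        for ya, yb in zip(ys, ys[1:]):
            for xa, xb in zip(xs, xs[1:]):
                fill(xa, ya, xb, yb)

    for y0 in range(0, max(height - 1, 1), block):
        for x0 in range(0, max(width - 1, 1), block):
            fill(x0, y0, min(x0 + block, width - 1), min(y0 + block, height - 1))
    return image, len(traced)
```

A scheme like this trades accuracy for speed: a detail smaller than a block can be missed entirely if all four corners happen to agree, which is acceptable for an interactive preview but is one reason to render final frames at full sampling.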

For some time, we fancied writing a real-time demo, but it turned out the techniques we wanted to employ (especially fog) were far too slow to achieve a decent resolution and framerate, no matter how much we optimized our code. Hence we decided to go with a pre-rendered video instead, still making use of the fact that the individual images were rendered fairly fast.

For the models, we simply searched the web and helped ourselves to the various portals offering free 3D objects. You can find links to all our sources on the References page. After adding an object to the scene, we could freely move it around, scale it and rotate it in our editor, immediately observing the raytraced results of these modifications. The configuration is ultimately serialized to a simple Lua script, which is later executed to reconstruct the scene. This also allowed us to change material properties and so on easily, even after the objects were already placed. Thanks to a model cache, reloading the Lua scripts did not require reloading all the models from disk, so changes to the programming side of a scene could also be previewed quickly.
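To illustrate what serializing the editor state to a Lua script could look like, here is a small sketch, again in Python rather than the project's own C++/Lua. The `Placement` record, the field names and the Lua call `scene.add_model{...}` are hypothetical; the actual script format of the raytracer is not described in the text.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Placement:
    """One object as positioned in the editor (hypothetical record)."""
    model: str
    position: Vec3 = (0.0, 0.0, 0.0)
    rotation_deg: Vec3 = (0.0, 0.0, 0.0)
    scale: float = 1.0


def lua_string(s: str) -> str:
    """Quote a string as a Lua literal (handles backslashes and quotes only)."""
    return '"' + s.replace("\\", "\\\\").replace('"', '\\"') + '"'


def lua_vec(v: Vec3) -> str:
    """Format a vector as a Lua table constructor, e.g. {0.0, 1.0, 0.0}."""
    return "{" + ", ".join(repr(float(c)) for c in v) + "}"


def serialize_scene(placements: Iterable[Placement]) -> str:
    """Emit a Lua script that rebuilds the scene when executed.

    `scene.add_model` is an assumed Lua-side function, not the real API.
    """
    lines = ["-- generated by the scene editor (illustrative format)"]
    for p in placements:
        lines.append(
            "scene.add_model{ "
            f"model = {lua_string(p.model)}, "
            f"position = {lua_vec(p.position)}, "
            f"rotation = {lua_vec(p.rotation_deg)}, "
            f"scale = {float(p.scale)!r} }}"
        )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(serialize_scene([Placement("teapot.obj", (0, 1, 0), scale=2)]))
```

Because the output is an ordinary script rather than a data file, material tweaks or small bits of logic can be edited by hand afterwards, which matches the workflow described above.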

The external programs we used were GIMP, to edit a few textures, and Blender, to convert and adjust some of the models.

Additionally, a 3ds Max trial version was used to export models from the .max format so that they could be imported into Blender.

For the final video (at a resolution of 1280x720 pixels and 30 frames per second), we used 16 machines, which together needed around 350 hours of computation for a total of 6600 frames. The individual images took between 30 seconds and 30 minutes to compute, depending on frame complexity and CPU speed. The total number of triangles in the scene is above 1.3 million. The first part of the video, until the screen explodes, was rendered with eight samples per pixel. Because fog is so expensive, we rendered the second part with just four samples per pixel; thanks to low-discrepancy sampling, this still yields acceptable visual quality.
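The figures above can be put into perspective with a little arithmetic. The sketch below assumes that the 350 hours are the sum over all machines and that the work was spread evenly across them; neither is stated explicitly, so the wall-clock estimate is a lower bound at best. The function name `render_budget` is made up for this example.

```python
def render_budget(frames: int, fps: int, machines: int, total_hours: float,
                  width: int, height: int) -> dict:
    """Derive a few summary figures from the render statistics."""
    return {
        # Length of the finished video in seconds.
        "duration_s": frames / fps,
        # Mean compute time per frame in minutes.
        "avg_minutes_per_frame": total_hours * 60 / frames,
        # Wall-clock hours if all machines were kept equally busy (assumption).
        "ideal_wall_clock_hours": total_hours / machines,
        # Pixels per frame; multiply by samples per pixel for primary samples.
        "pixels_per_frame": width * height,
    }


budget = render_budget(frames=6600, fps=30, machines=16, total_hours=350,
                       width=1280, height=720)
# duration_s             -> 220.0   (3 minutes 40 seconds)
# avg_minutes_per_frame  -> about 3.18
# ideal_wall_clock_hours -> 21.875
# pixels_per_frame       -> 921600  (about 7.4 million samples at 8 per pixel)
```

The average of roughly three minutes per frame sits near the lower end of the reported range of 30 seconds to 30 minutes, which suggests that only a minority of frames, presumably the fog-heavy ones, were at the expensive end.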

At this point, we would like to thank Alexander Steigner & Jan Martens for providing additional CPU power.